Continue Notes

Table of Contents

1. Continue Configuration

- In the Primary Side Bar, click on the Continue extension icon.

-

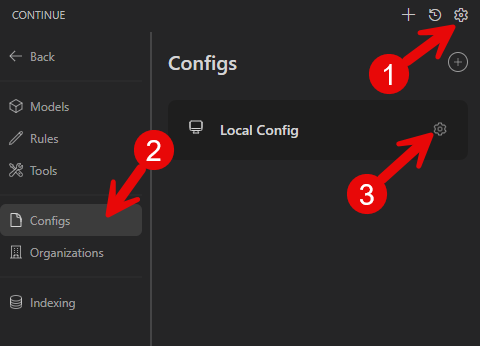

Now follow the numbered steps in the screenshot to open the config file:

- The `config.yaml` file for Continue will appear in the editor window.
- Create or edit a YAML config, using the example below as a reference.

Example YAML config file:

```yaml
name: Local Ollama Assistant
version: 1.0.0
schema: v1
models:
  - name: Ollama-CodeLlama:34b          # (1) Friendly model name
    apiBase: http://192.168.1.53:11434/
    provider: ollama
    model: codellama:34b                # (2) Actual model name
    roles:
      - autocomplete
      - chat
      - edit
      - apply
    defaultCompletionOptions:
      temperature: 0.3
  - name: Ollama-DeepSeek-Coder-R2-16b
    apiBase: http://192.168.1.53:11434/
    provider: ollama
    model: deepseek-coder-v2:16b
    roles:
      - autocomplete
      - chat
      - edit
      - apply
    autocompleteOptions:
      debounceDelay: 350
      maxPromptTokens: 1024
      onlyMyCode: true
    defaultCompletionOptions:
      temperature: 0.3
  - name: Ollama-Phi4
    apiBase: http://192.168.1.53:11434/
    provider: ollama
    model: phi4:latest
    roles:
      - autocomplete
      - chat
      - edit
      - apply
    autocompleteOptions:
      debounceDelay: 350
      maxPromptTokens: 1024
      onlyMyCode: true
    defaultCompletionOptions:
      temperature: 0.3
  - name: Ollama-Qwen3:30b
    apiBase: http://192.168.1.53:11434/
    provider: ollama
    model: qwen3:30b
    roles:
      - autocomplete
      - chat
      - edit
      - apply
    autocompleteOptions:
      debounceDelay: 350
      maxPromptTokens: 1024
      onlyMyCode: true
    defaultCompletionOptions:
      temperature: 0.3
  - name: Ollama-Qwen3-Coder:30b
    apiBase: http://192.168.1.53:11434/
    provider: ollama
    model: qwen3-coder:30b
    roles:
      - autocomplete
      - chat
      - edit
      - apply
    autocompleteOptions:
      debounceDelay: 350
      maxPromptTokens: 1024
      onlyMyCode: true
    defaultCompletionOptions:
      temperature: 0.3
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase
prompts:
  - name: check
    description: Check for mistakes in my code
    prompt: |
      Please read the highlighted code and check for any mistakes. You should look for the following, and be extremely vigilant:
      - Syntax errors
      - Logic errors
      - Security vulnerabilities
```
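Before reloading Continue, it can help to sanity-check the edited config. The Python sketch below is an illustration, not part of Continue: it assumes the YAML has already been parsed into a dict (for example with PyYAML's `yaml.safe_load`), and the `KNOWN_ROLES` set is an assumption based on the roles Continue documents for `config.yaml`. The function name `check_config` is hypothetical.

```python
from __future__ import annotations

# Assumed from Continue's config.yaml docs; verify against your Continue version.
KNOWN_ROLES = {"chat", "autocomplete", "edit", "apply", "embed", "rerank", "summarize"}
REQUIRED_MODEL_KEYS = ("name", "provider", "model")


def check_config(config: dict) -> list[str]:
    """Return human-readable problems found in config["models"]; [] means none found."""
    problems: list[str] = []
    seen_names: set[str] = set()
    for i, entry in enumerate(config.get("models") or []):
        # Use the friendly name as a label, falling back to the list position.
        label = entry.get("name", f"models[{i}]")
        for key in REQUIRED_MODEL_KEYS:
            if key not in entry:
                problems.append(f"{label}: missing '{key}'")
        # Two entries with the same friendly name are hard to tell apart in the UI.
        if label in seen_names:
            problems.append(f"{label}: duplicate friendly name")
        seen_names.add(label)
        # Flag role names that are not in the assumed role list (e.g. typos).
        for role in sorted(set(entry.get("roles") or []) - KNOWN_ROLES):
            problems.append(f"{label}: unknown role '{role}'")
        # In these notes Ollama runs on another host, so apiBase should be set.
        if entry.get("provider") == "ollama" and "apiBase" not in entry:
            problems.append(f"{label}: provider is ollama but no apiBase is set")
    return problems


if __name__ == "__main__":
    sample = {
        "models": [
            {"name": "Ollama-Phi4", "provider": "ollama", "model": "phi4:latest",
             "apiBase": "http://192.168.1.53:11434/", "roles": ["chat", "edit"]},
            {"name": "Ollama-Phi4", "provider": "ollama", "roles": ["chat", "complete"]},
        ]
    }
    for problem in check_config(sample):
        print(problem)
```

With PyYAML installed, `check_config(yaml.safe_load(open(path)))` runs the same checks against the real file.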

- Modify the `models` section of the config to reflect the LLMs installed in Ollama. Refer to the Ollama-Notes doc to get a list of installed models.
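Instead of copying model names from the Ollama-Notes doc by hand, they can be read from Ollama's `GET /api/tags` endpoint, which lists locally installed models. The Python sketch below assumes the Ollama host from the example config and Continue's usual global config path `~/.continue/config.yaml` (adjust both if yours differ); the helper names and the friendly-name rule in `model_entry` are hypothetical. It compares the `model:` values in the config with what Ollama reports and prints a pasteable entry for each installed model that is not yet configured.

```python
from __future__ import annotations

import json
import os
import re
import urllib.request

# Assumptions: Ollama host taken from the example config; usual Continue config path.
OLLAMA_URL = "http://192.168.1.53:11434"
CONFIG_PATH = os.path.expanduser("~/.continue/config.yaml")


def fetch_tags(base_url: str) -> dict:
    """GET <base_url>/api/tags and return the decoded JSON body."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def installed_models(payload: dict) -> list[str]:
    """Sorted model tags such as 'qwen3:30b' from an /api/tags response."""
    return sorted(m["name"] for m in payload.get("models", []))


def configured_models(config_text: str) -> list[str]:
    """Crude line scan for `model:` values; a real YAML parser would be more robust."""
    return re.findall(r"^[ \t-]*model:[ \t]*([^\s#]+)", config_text, flags=re.MULTILINE)


def model_entry(tag: str, api_base: str) -> str:
    """Render one `models:` list item shaped like the example config.

    Hypothetical naming rule: "Ollama-" + tag, dropping a ':latest' suffix.
    """
    base = tag[: -len(":latest")] if tag.endswith(":latest") else tag
    roles = "".join(f"      - {r}\n" for r in ("autocomplete", "chat", "edit", "apply"))
    return (
        f"  - name: Ollama-{base}\n"
        f"    apiBase: {api_base}\n"
        "    provider: ollama\n"
        f"    model: {tag}\n"
        "    roles:\n"
        f"{roles}"
        "    defaultCompletionOptions:\n"
        "      temperature: 0.3\n"
    )


def main(base_url: str = OLLAMA_URL, config_path: str = CONFIG_PATH) -> None:
    """Report mismatches between Ollama and the config, with entries to paste."""
    installed = set(installed_models(fetch_tags(base_url)))
    with open(config_path, encoding="utf-8") as fh:
        configured = set(configured_models(fh.read()))
    for tag in sorted(configured - installed):
        print(f"# configured but not installed: {tag}")
    for tag in sorted(installed - configured):
        print(f"# installed but not configured: {tag}")
        print(model_entry(tag, base_url.rstrip("/") + "/"), end="")
```

Only `main()` touches the network and the file system; the other helpers are pure functions, so they can be reused or tested without a running Ollama server. The line-based `model:` scan is deliberately crude and may miss unusual YAML layouts.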